The Verge: VR's PC Revolution Moment

"History is much like an endless waltz. The three beats of war, peace, and revolution continue on forever." - Mariemaia Khushrenada, Gundam Wing: Endless Waltz

The assumption of cyclicality in human endeavors is one that is simultaneously overestimated and underappreciated; yet knowing exactly when to apply the lessons of the past can yield great insight into what comes next.

While its earliest iterations can be traced to Cold War-era academic labs and military research programs, the modern Extended Reality ("XR"; inclusive of virtual, mixed, and augmented reality-based technologies) industry is hardly a decade old - having seemingly been revived from cryogenic sleep by a SoCal-based teenage hacker-turned-entrepreneur. After decades of irrelevance, the emergence of the Oculus DK1 in 2013 jumpstarted a revolution in accessible, consumer(ish)-grade VR devices and associated software for a new generation of users; yet, in many ways, the industry has failed to shake off the perception (accurate or not) of being a niche category better suited to enthusiasts and gamers.

This is precisely where the early PC industry found itself in the late 1970s - a quickly evolving industry filled with hackers, tinkerers, hobbyists, and visionaries, and very real, lingering questions regarding the case for broader mainstream adoption outside of these circles. Yet the emergence of key productivity software (so-called "Killer Apps") in categories such as spreadsheets, word processing, and database management - along with the entry of the behemoth that once was IBM - drove PC adoption among enterprises and mid-sized businesses in the 1980s.

The importance of the above cannot be overstated - by legitimizing both the PC market and productivity-based use cases, these developments led thousands of businesses to subsidize PC purchases for employees, dramatically expanding a) the total installed base of PCs, and b) the market for consumer PC hardware and software. In turn, this expansion of the PC market would lay the groundwork for the widespread adoption of other computer-based technologies (such as the Internet) in the following decades.

I believe that XR will follow a path similar to that of the PC industry; in other words, mainstream adoption will be achieved through productivity-enhancing software paired with dedicated, high-quality hardware effectively subsidized by employers - and we are likely close to that turning point.

To better understand why, a bit of historical context is warranted.

Brief History of The PC Revolution

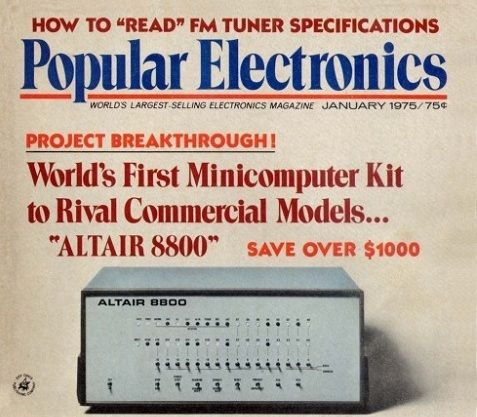

Much has been written on the broader industry developments leading up to December 1974 - but the short version is that Intel's efforts to build microprocessors for demanding clients earlier that decade ultimately resulted in Popular Electronics publishing a cover photo of an empty box built by an Albuquerque, New Mexico-based calculator manufacturer on the verge of bankruptcy (the actual prototype was lost in the mail).

The company in question, Micro Instrumentation and Telemetry Systems (or "MITS"), had begun operations several years earlier out of the garage of a former Air Force officer by the name of Ed Roberts. Together with a handful of ex-military colleagues, Roberts initially focused on producing radio telemetry transmitters for model rockets, sold via mail order. By the early 1970s, Roberts had bought out his previous partners and shifted his focus to the then-lucrative market for calculators. Semiconductor firms such as Intel & Texas Instruments had radically changed the market for electronics by introducing microprocessors, a critical technology for enabling devices with embedded applications (such as calculators).

Unfortunately for MITS, high demand (and associated margins) for calculators led several semiconductor firms to enter the market and directly compete with former customers. This led to a surge in supply and a collapse in the price of calculators, virtually overnight. By 1974, MITS was in debt and in need of a dramatic change in trajectory. Roberts decided to bet his company on building a microcomputer kit (itself built around Intel's new 8080 microprocessor) that would be sold to technically inclined hobbyists.

Coincidentally, the editors of Popular Electronics (a hobbyist magazine) were actively seeking computer stories to publish, largely in response to a competitor's recent series of articles covering independent attempts to build computers for personal use.

At the time, the computer industry consisted almost exclusively of room-sized mainframes (and somewhat smaller minicomputers) that only large enterprises, major universities, and critical government entities (such as the military & NASA) could afford or have a practical use for. While still untested, there was a general sense that pent-up demand for accessible computers existed among technical enthusiasts who lacked the access or capital to work with existing devices.

Editor Les Solomon, who already knew of MITS as one of the magazine's advertisers, met with Roberts in the summer of 1974 to discuss the possibility of a cover story. Solomon agreed to run it on the condition that Roberts deliver a working prototype. While that prototype was lost in transit, MITS managed to put together a gutted version (little more than a box with blinking lights) just in time for the cover shoot.

The January 1975 edition of Popular Electronics was released with a cover story featuring what would become known as the first commercially successful microcomputer: the Altair 8800 (priced at $397).

The Altair was scarcely more than a build-it-yourself kit consisting of a box, a CPU board (featuring Intel's 8080 microprocessor), 256 bytes of memory, and a front panel with lights & switches; peripherals such as terminals and keyboards were not (initially) included. Yet, within weeks of the Popular Electronics cover story, MITS was inundated with hundreds of orders that it could hardly hope to fulfill within the promised 60-day delivery window. The largely untested market demand for microcomputers turned out to be larger than anyone had realized.

News of the Altair's release reverberated throughout the budding hobbyist community, directly influencing the rise of the Homebrew Computer Club (whose early membership included Apple co-founder Steve Wozniak) in Menlo Park, CA. In Cambridge, MA, the cover story reached the hands of a young Paul Allen, who in turn ran to tell his childhood friend (Bill Gates) about the Altair; by the end of 1975, both would move to Albuquerque to build an Altair-compatible interpreter for BASIC (an early general-purpose programming language) for MITS, and in the process create Microsoft.

In the years following the Altair's release, the microcomputer industry would spring into being. Popular magazines such as Byte, Dr. Dobb's, and Kilobaud (later Microcomputing) would serve to educate & inform a growing, yet geographically distributed, hobbyist base. Simultaneously, the Homebrew model would spread to other parts of the U.S., leading to the rise of groups such as the Boston Computer Society. Eventually, a critical mass of interest would lead to the first major industry event - the West Coast Computer Faire. In turn, the community's receptiveness would signal to early microcomputer manufacturers, distributors, and retailers the size of the growing market.

By the end of 1977, three relatively major players had emerged: Tandy Corporation (via its RadioShack stores) with the TRS-80, Commodore International with the PET, and Apple with the Apple II.

The 1977 Trinity, as the three devices would come to be called, were among the first microcomputers to a) require minimal assembly and be functional off-the-shelf, and b) be marketed to a broader consumer audience than previous computers. The TRS-80 took the lead due to its inherent advantage in retail distribution, while the Apple II took 3rd place - in part due to its high cost relative to the other two. Despite their makers' attempts to present them as tools for everyday use, the lack of compelling software (apart from games such as Microchess & Adventure) and varying hardware quality kept the market niche and concentrated among hobbyist programmers, casual gamers, and a few small business users - at least initially. Apple in particular realized that for its device to be taken seriously, it had to provide serious value beyond entertainment.

During the winter of the following year, a Harvard MBA student and his college friend-turned-business partner spent their late evenings coding away in the attic of a house near Boston, MA. The program they were developing was meant to run on an Apple II, but that device's limited developer tools meant using MIT's time-sharing (think proto-cloud computing) mainframe computer - and rates were cheapest during the midnight hour. The software they would develop, a spreadsheet program named VisiCalc, would change the trajectory of both Apple and the broader microcomputer industry; it became the first "Killer App."

VisiCalc helped double the Apple II's sales between 1979 and 1980 and contributed to the device's perceived value; now it was a true business tool that could dramatically expand productivity. And serious players in the computer industry began to take notice.

By the beginning of 1980, the microcomputer industry was shipping half a million devices per year and the total installed base was nearing one million. The advent of productivity software led to devices such as the Apple II increasingly showing up in businesses - then the purview of the mainframe computer behemoth IBM. What started as an indie hobbyist movement was now solidifying into a proper industry, and the timing seemed perfect. William Lowe, an IBM executive who had worked on IBM's earlier attempts to create microcomputers, was tapped to devise a strategy to design a microcomputer quickly and cheaply, within 12 months.

Breaking with IBM tradition, Lowe's plan involved using third-party hardware (such as Intel's 8088 processor) & software; building the product in a skunkworks-esque, multi-disciplinary group detached from IBM's existing bureaucratic structure; and selling the device predominantly through existing retail/dealer networks vs. IBM's traditional salesforce.

In addition, IBM would partner with Microsoft to create the operating system and programming language, a decision that would install Microsoft as the preeminent software company of the computing era (while eventually sealing IBM's own fate). IBM would also partner with third-party software publishers to ensure productivity-focused utility out of the gate.

On August 12th, 1981, the IBM 5150 would be announced after months of industry speculation and forever change the history of personal computing - beginning by popularizing the term "PC." By entering the PC market, IBM legitimized what had seemed like a passing fad to much of the general public. Within 3 years, the PC market would explode into a multi-billion dollar industry with a 15m+ installed base; by some estimates, >75% of PCs were purchased for enterprise & small business use - driven by three key productivity-enhancing software categories: word processors (WordStar, then WordPerfect), spreadsheet software (VisiCalc, then Lotus 1-2-3), and database management (dBase II and successor suites).

The IBM PC's success led to a wave of compatible clones, many of which legally reverse-engineered the PC's BIOS (proprietary firmware) to tap into the growing ecosystem of third-party software vendors writing specifically for the IBM PC. This would also have the effect of establishing the PC as the most popular design standard for decades to come. Competitive pressure would also directly influence the creation of Apple's revolutionary (though only moderately successful) Macintosh line, and indirectly the rise of desktop publishing.

Widespread adoption of the PC would introduce millions of consumers, via the workplace, to the power of personal computing, which in turn expanded the market for home computers; this was complemented by deepening PC supply chains that reduced the cost of manufacturing components. Within the next couple of decades, personal computers would become ubiquitous and impact virtually every segment of the economy, while laying the foundation for mainstream adoption of the internet and the later rise of smartphones.

All from a build-it-yourself box shipped via mail order from Albuquerque.

Note: For a more detailed account of the PC Revolution, look no further than Fire in the Valley: The Birth and Death of the Personal Computer.

Virtual Reality Resurrected

Three decades after the IBM PC was released, a homeschooled teenager with a proclivity for hardware modding would spend his days deconstructing old head-mounted displays ("HMDs") and sharing his findings with fellow enthusiasts on MTBS3D (an online forum focused on 3D gaming). From the garage of his parents’ home in Long Beach, California, a young Palmer Luckey would dream of building a virtual future by studying the very real attempts (and failures) of the past.

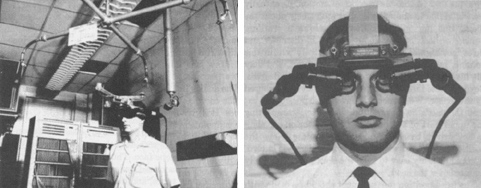

Before the 2010s, the XR industry was largely confined to government labs and defense contractor skunkworks - except for a brief consumer spell in the '90s. The Sword of Damocles, widely considered to be the first VR HMD, was developed by a team led by Ivan Sutherland at Harvard University in 1968. The Department of Defense-funded project was the culmination of research conducted by Sutherland over several years, focused on the concept of using computers to both generate and interact with virtual environments (of particular relevance for military training).

The device's weight, and the need to track head movements, required that it be suspended from the ceiling via a mechanical arm; in a sense, it was the "mainframe" of HMDs. While the graphics generated would be relatively simple wireframes produced at an exorbitant cost, Sutherland's team succeeded in creating the first head-tracked VR HMD.

Sutherland would later move west, where he would join the University of Utah research staff and co-found a computer graphics firm; his former students and employees would include the founders of Silicon Graphics, Adobe, and Pixar.

From 1968 to 1990, the emerging technology would continue to be explored within academia, government agencies, and the military: MIT would create the Aspen Movie Map, a digital recreation of the eponymous city that could be virtually navigated in a Google Street View-esque manner; Thomas Furness would pioneer the use of virtual interfaces for flight simulation-based training within the U.S. Air Force; and NASA would partner with artists and engineers to simulate space for astronauts-in-training.

The mid-80s would see the rise (and eventual fall) of VPL Research, one of the first commercial VR product companies. Founded by members of Atari's short-lived research lab, such as Jaron Lanier (who coined the term "Virtual Reality"), VPL would go on to develop and market the EyePhone (an early VR HMD), along with haptic devices, to customers such as NASA.

The '90s brought the first attempts to break into the consumer market, via Nintendo's failed Virtual Boy and the never-released Sega VR HMD, along with moderately successful, arcade-focused devices from Virtuality. By the middle of the decade, shifting public (and investor) interest towards the emerging World Wide Web would help deflate the hype around VR; the industry would retreat to the military training and academic research facilities where it was born, for the so-called "VR Winter."

That winter lasted until the early 2010s. Back in SoCal, Palmer Luckey would spend money earned from repairing phones and scrubbing boats to acquire old HMDs from eBay, government auctions, and private collections, with the ultimate goal of using his learnings to develop a functional prototype that could unlock the seemingly limitless potential of VR.

While previous devices were limited by the technology of their day, rapid improvements in computing power, display/optics tech, and Inertial Measurement Unit sensors ("IMUs"; electronics that measure orientation and motion, enabling features such as automatic screen rotation on smartphones), driven in part by expanding smartphone manufacturing supply chains, would play a key role in allowing Luckey to iterate on prototypes rapidly and cost-effectively. More importantly, these improvements would make affordable personal VR HMDs theoretically possible, vs. the $100K+ devices of the past; this, in Luckey's view, would be critical to eventual consumer adoption.

Luckey would join the University of Southern California-affiliated Mixed Reality Lab as a part-time technician, where he would earn access to additional resources and expertise (including from former VR pioneer Mark Bolas) on his quest to resurrect VR. Within 12 months, the prototype that formed the foundation of the modern wave of virtual reality would be ready.

2012 was a pivotal moment for the nascent industry. By mere chance, legendary game developer John Carmack came across Luckey's online documentation of his attempts to build a VR HMD. Carmack, who had been privately exploring virtual reality developments, connected with Luckey and was able to secure a prototype ahead of the now-defunct E3 video game conference. Luckey was already planning to launch a Kickstarter campaign to fund his project, and happily obliged; if anything, Carmack's endorsement would only boost his chances of success.

The media & publicity blitz following Carmack's demo of a VR-compatible version of Doom 3 at E3 2012 would alter the trajectory of Luckey's VR project, which would come to be known as Oculus VR. The original $250K campaign goal was reached within hours of launch, and total funds raised would hit $2.4M in a matter of weeks.

Within a year, Oculus would ship a developer-focused device (aptly named the DK1) for effectively $350 to early backers of the Kickstarter campaign; and, not unlike the Altair 8800, the first kit was...a bit rough. The device had to be tethered by cable to a PC, had low resolution, lacked the positional tracking needed for a full sense of immersion, and featured software with limited functionality. And, not unlike the Altair, nearly every device Oculus could make was purchased by enthusiasts and early adopters despite its flaws.

Oculus would unveil an improved developer kit and, in 2014, be acquired by Meta (formerly Facebook) in a cash and stock deal valued at $2bn+. Other firms began to take notice: Microsoft would announce its augmented reality-focused HoloLens project; Samsung and Google would separately launch headsets that used smartphones as displays; and legacy PC suppliers such as HP, Lenovo, and Dell would announce projects of their own.

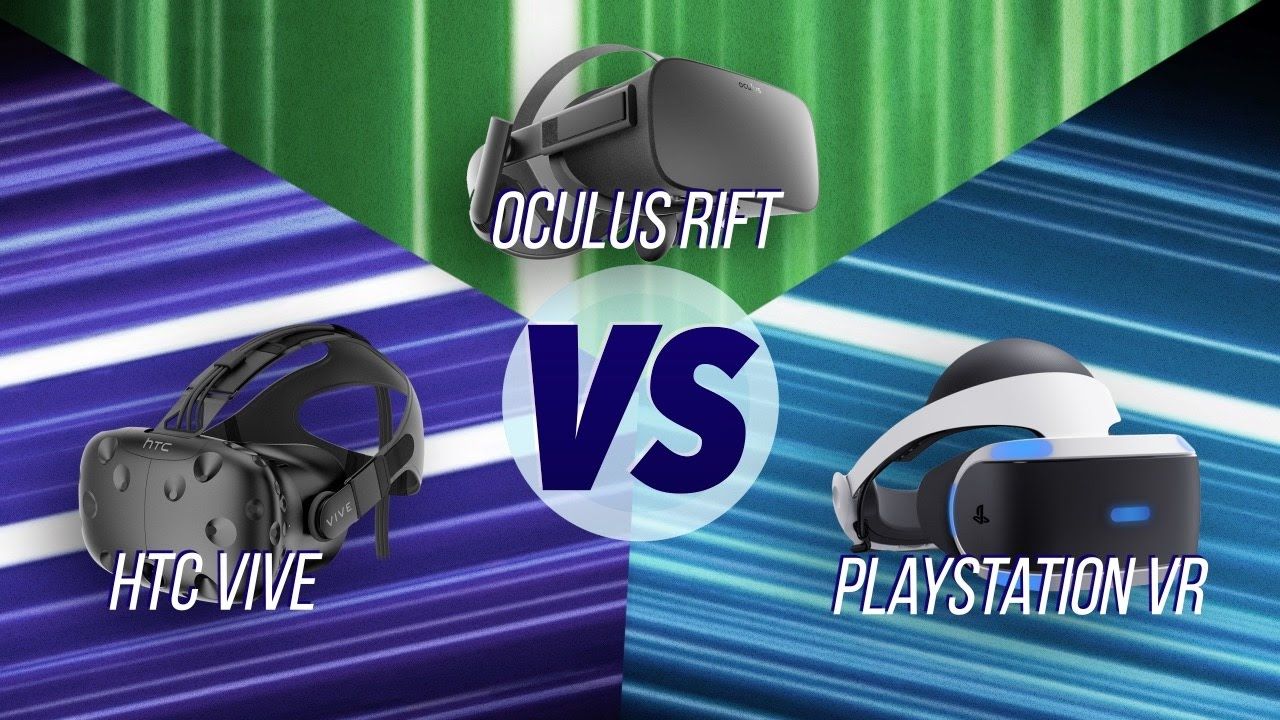

In a curious parallel to the 1977 Trinity, three major headsets would launch within months of each other in 2016 - marking the official beginning of "consumer-focused" VR: the HTC Vive, built in partnership with one-time Oculus partner Valve; Sony's gaming-centric PSVR; and Oculus' first consumer HMD, the Rift.

Headsets of varying quality, price, target market, graphical fidelity, and intended use would launch between 2016 and 2023. At the lower end of the market, Oculus/Meta would develop standalone devices such as the Go and the Quest line, which could be operated without a tethered PC (albeit at the cost of compute horsepower and a lower ceiling on graphical quality). At the other extreme, firms such as Varjo would launch ultra high-end devices aimed at enterprise users for VR-aided industrial design and flight simulation (among other use cases).

Yet, in spite of the medium's promise, HMDs have largely been confined to the enthusiast and gamer crowd. While precise metrics aren't readily available, Oculus/Meta is presumed to hold ~75% of the global HMD market, per the WSJ. Combined with internal Quest sales data leaked in early 2023, this would imply a global installed base of ~26M; for the record, I don't believe including enterprise-grade headsets would meaningfully change that figure.

Examining available data (as of November 2022) on both free and paid apps on the Meta Quest Store, courtesy of Road To VR, the top 20 rated apps in either category are predominantly games, gamified experiences, and social apps.

To be sure, there are exciting use cases emerging in enterprise training, education, industrial design, and even telerobotics (more on these later), but few standout system-selling, productivity-focused killer apps have emerged. The general consensus seems to be that a chicken-and-egg problem between compelling content and hardware-related issues (such as form factor, battery life, poor UI/UX, and VR-induced nausea) is the primary inhibitor to wider adoption.

While that argument is debatable, it's not difficult to see that what was intended to be a transformational technology has so far woefully underdelivered. And thus, not unlike the PCs of the late '70s, today's VR devices seem like mere novelties, or at best, solutions in search of problems.

So what’s next?

The Path to The Wired

The PC Revolution, despite its slow start and relatively humble beginnings, fundamentally changed the way we work, live, and play - forever transforming our relationship with technology. The XR industry will do the same on a grander scale while following a path that parallels that of PCs. Viewing XR adoption through the paradigm of smartphones is therefore misleading: XR will first gradually replace traditional laptops and workstations, and the adoption rate will be more deliberate.

The technology will be adopted at scale with the emergence of 10x-productivity killer apps that utilize the advantages of 3D space, hardware that is optimized around (or directly facilitates) professional use cases, and commercial entities (from small businesses to large corporates) willing to subsidize VR solutions for employees in order to supercharge productivity. Doing so, again not unlike the PC, will indirectly expand the market for consumer use cases and establish spatial computing as the next technological paradigm.

Predicting the exact shape or form the necessary killer app might take is a total fool's errand - but I have a few ideas about where to look. I'm of the opinion that such apps will emerge by focusing on one or more of the following concepts:

3D Spatial Design

Description

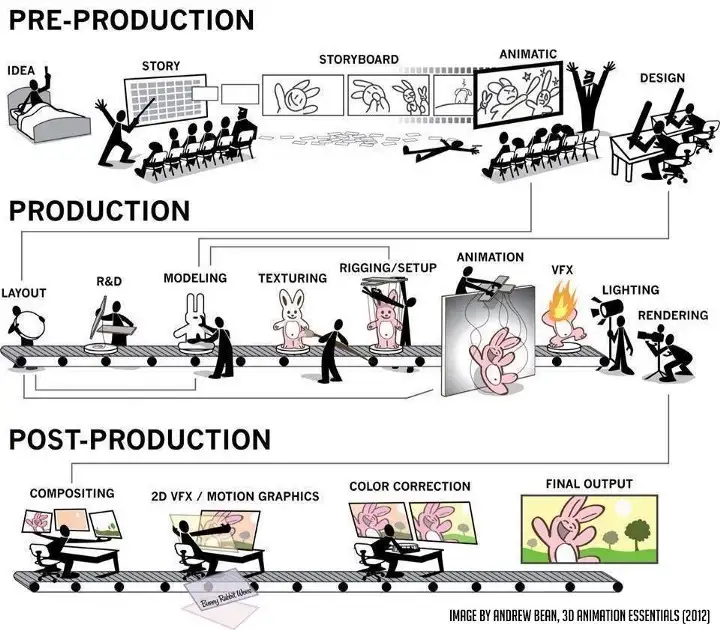

Defined as all core activities directly related to conceiving, prototyping, modeling, and rendering three-dimensional representations of objects using XR hardware & software, either for direct consumption, such as video games (flatscreen & immersive) and CGI-powered film/television content, or for indirect consumption, such as the use of high-fidelity 3D models to facilitate the manufacture of physical objects or structures - as in industrial design, construction/architecture, fashion, etc.

Market Opportunity

Integrating VR-native design tools directly into the spatial design/3D asset creation process is, in my opinion, the most straightforward opportunity. The current process relies on unintuitive, oftentimes clunky 2D interfaces and a fragmented ecosystem of software packages with varying learning curves.

These core issues are compounded by factors such as non-interoperable open and proprietary file formats, disjointed workflows between designers/artists and programmers, and increasing demand for higher-fidelity content in the face of talent supply constraints. Gaming and film production, two verticals with global end markets collectively worth ~$272Bn in 2021, would alone be lucrative markets: taking into account capital spent on 3D modeling software & hardware along with labor costs, industry participants collectively spent ~$30Bn in the same year on 3D asset creation-related activities.

Assuming demand for 3D assets continues to grow (it will), there exists an opportunity for 3D-native spatial design solutions that take advantage of a core XR-strength - the ability to create, craft, and interact with 3D objects using a medium native to the object itself.

The ability to sculpt, model, paint, and detail as if one were an artist with an endless canvas, while abstracting away cluttered menus and dropdown boxes, has the potential to supercharge the 3D asset creation process (possibly in conjunction with emerging tools such as generative AI).

Challenges

Any 3D-native design alternative would have to contend with the fact that 2D-native solutions have directly benefited from decades of hyper-optimization and established workflows within larger studios and firms. It's likely that success will require integration into existing processes and a "cross-platform" approach that accounts for tablets and laptops, along with HMDs - at least initially.

Additionally, existing hardware solutions can be too costly, too limited in the comfort and functionality needed for longer creation sessions, or both. Questions also exist around what the proper input modalities should be; for example, are gaming-centric controllers ideal for spatial design, or is there an opportunity to experiment with hand/eye tracking?

Current Examples

Bezel, Gravity Sketch, ShapesXR, Open Brush, Vermillion, Figmin XR, Quill, Substance Modeler, Polysketch, Omniverse (Nvidia).

Telepresence

Description

Defined as the use of XR technology to generate the sense of being physically present in remote or virtual environments for the purposes of communicating, engaging, and interacting with people or things in a manner at or near parity with natural, in-person interactions; use cases would include more effective remote collaboration, meetings, site visits, education, travel, and exploration.

Market Opportunity

The remote work/WFH experiment catalyzed by the COVID-19 pandemic, while temporarily boosting the fortunes of video conferencing providers, laid bare the limitations of existing remote communication and collaboration tools when entirely divorced from IRL/in-person interactions. Friction related to onboarding, planning meetings, technological hiccups, and limited ability for human expression (tone, body language, etc.) can make building cohesive team dynamics, mentoring junior employees, and facilitating spontaneous interactions very difficult; this is particularly pronounced for firms and industries with limited experience or structural capacity for remote-first models.

Tapping into the ability to replicate the sense of presence felt when engaging in communication within an immersive, virtual space (while hard to convey without direct experience) is key to providing an effective, long-term solution to managing distributed teams and workforces. Additionally, there are a few interesting emerging use cases in telerobotics, such as using VR HMDs to remotely control robotic submersibles.

The opportunity is certainly not insignificant either - the video teleconferencing market's value was estimated to be $6.3Bn at the end of 2021. That said, the greater opportunity, from an enterprise perspective, would be to capture the cost savings associated with reducing business travel expenses ($322.4Bn in the US alone), employee commuting (on average 330 hours a year), and commercial real estate-related expenditures.

Challenges

While the numbers quoted above are eye-opening, XR-based telepresence solutions would likely have only a gradual impact on reducing travel and physical footprint-related expenditures. Many businesses do require physical presence (such as hospitality, medicine, and construction), and it remains unclear how much effective communication in VR depends on high-fidelity representations of people vs. increasingly abstract avatars. Collaborating on work-related matters will likely require additional UX/UI considerations; for example, is whiteboarding on a virtual flat screen or on a representation of an actual whiteboard additive or distracting? HMD onboarding and usage friction relative to flatscreen video conferencing solutions are relevant barriers to adoption as well.

Examples

Horizon Workrooms, Microsoft Mesh, Spatial, Glue VR, MeetinVR, Rumii.

Simulations

Description

Defined as the use of XR technology to create digital objects, environments, and/or scenarios that replicate physical reality or imagined experiences for the purposes of training & education, experimentation, visualization, and/or problem-solving.

Market Opportunity

While seemingly more ambiguous, simulation-based use cases are arguably among the first productivity-focused applications of VR, given its history as a tool for military training. Within an enterprise context, VR-based training has been adopted to varying degrees in recent years. Per a 2022 PwC survey, 51% of companies are either in the process of integrating VR into training workflows or have already done so. The same study concluded that VR learners trained 4x faster than those taught via traditional classroom-based techniques. With the cost of corporate training in the U.S. surpassing $100bn in 2022, the value proposition for immersive, simulated training is very clear.

Using VR to simulate virtual monitors and effectively "travel" with your home office could also be useful, even if it's "low-hanging fruit" relative to other simulation-based uses and entirely dependent on screen resolution and HMD form factor (especially for long work sessions).

Personally, I believe there's an even larger opportunity within traditional education. While still untested at scale, the use of simulated environments to expand the classroom could fundamentally transform what the future of school looks like. Beyond the proverbial "visit to ancient Rome", VR can be used to simulate mechanical workshops, chemistry labs, physics-based "playgrounds", and biology labs without being bound by physical or budgetary constraints - possibly doing more to democratize access to education for millions of children/young adults globally than the Internet has (or has attempted to).

Challenges

Restructuring corporate and educational curricula that have been established for decades (or longer) is no simple task and will likely require sufficient supporting data and buy-in from multiple stakeholders over the course of years to justify adopting simulation-based approaches. Additionally, existing device form factor and comfort may reduce the total pool of participants who would benefit on a net basis from virtual training and education. It's also possible that adopters who are already well-resourced will be able to integrate these solutions before those with a greater need for cost-effective hardware & software.

Examples

Strivr, Virtual Desktop, Immersed, vSpatial, NeuroRehabVR, Osso VR.

Extrapolating future predictions from past precedents can easily turn into an exercise in pattern (over)matching and lead to unreasonable conclusions. While the modern XR industry has its roots in tinkering/modding culture, the space became dominated by larger corporates much faster than the PC industry did, and the emergence of a killer app in the PC industry (VisiCalc) took place much sooner in its lifecycle. Using a traditional PC was also not far removed from using '70s-era typewriters and office equipment, whereas putting on an HMD involves total sensory immersion; few users became motion sick from staring at CRT monitors.

Today, XR still feels like an industry in search of problems and is largely seen as a toy by the general public. It has yet to experience its "IBM PC Moment", although that may change in a matter of days or weeks courtesy of one of the original PC Revolution pioneers. It's possible that even Apple can't change the perceptions, justified or unreasonable, of an industry with the potential to transform our relationship with technology.

That said, I can't quite shake the feeling that we are on the verge of what comes next...
